Effect Size Matters

Omitting effect sizes in science reporting risks misleading the general public

When media coverage of scientific findings fails to mention the size of the effects reported, people tend to assume that the effects are large - large enough to have practical significance. As a result, people may sometimes end up wasting time and money on health, dietary, or educational interventions that have negligible effects at best.

This is the conclusion of a fascinating new paper by Audrey Michal and Priti Shah, published in the journal Psychological Science. The paper describes two studies. In the first, participants read about a new curriculum that boosts kids’ math performance, and were then asked how strongly they’d recommend the curriculum to schools. In the second study, participants read about a new energy bar that boosts people’s mental alertness, and were then asked how willing they’d be to purchase it.

The twist was that there were three versions of each article. In one, the effect was described as large. In another, it was described as small. And in a third version, no information was given either way about the size of the effect. Also, for the “Small Effect” article, some participants were explicitly prompted to think about the meaningfulness of the effect before evaluating the curriculum or energy bar, whereas the rest weren’t prompted.

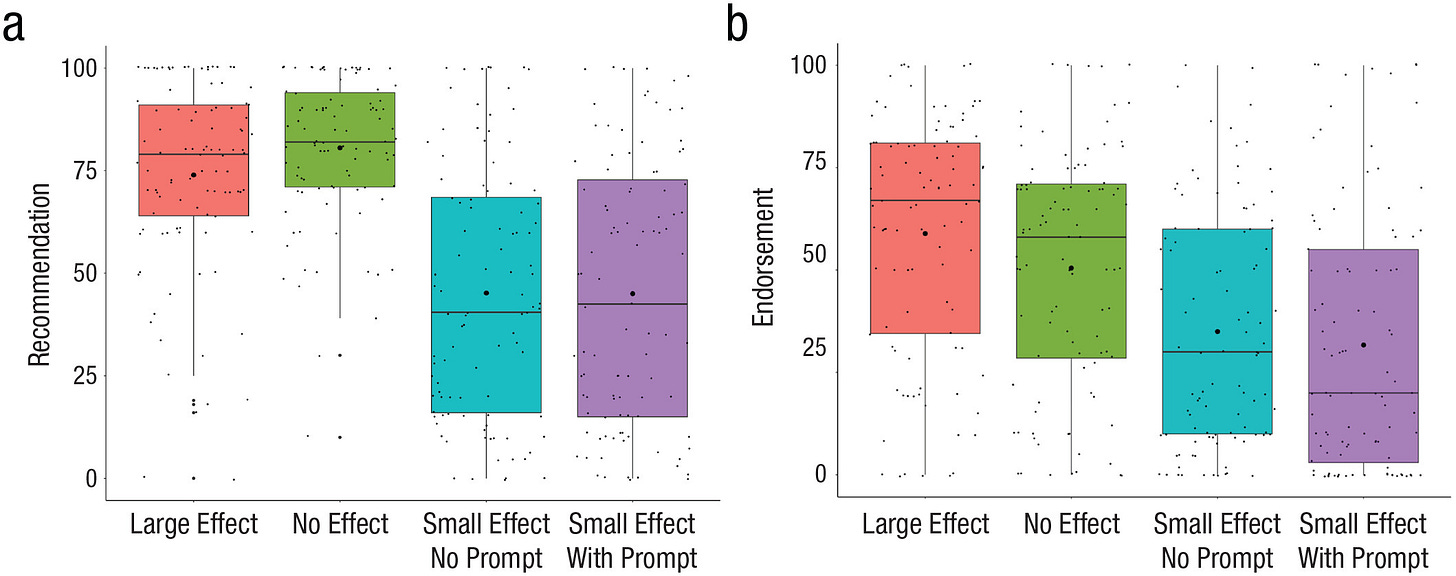

The graph above presents the results. Panel A shows people’s willingness to recommend the math curriculum (Study 1); Panel B shows people’s willingness to purchase the energy bar (Study 2).

As you can see, participants who were told nothing about the magnitude of the effect tended to assume that the effect was large, with no significant difference between the “Large Effect” and “No Effect Mentioned” conditions. This points to an important bias in how people interpret media coverage of scientific findings: Unless we’re specifically told otherwise, we tend to assume - not unreasonably - that reported results will be of practical significance. As every scientist knows, however, that’s not always the case. The moral of the story, then, is that articles reporting on scientific studies should mention the size of any effect - especially if the effect is small.

Interestingly, prompting participants to think about the meaningfulness of the reported effects had no significant impact. Whether prompted or not, participants were much less likely to endorse an intervention with a small effect than a large one.

Or at any rate, this was the case for the group as a whole. Digging into the details, however, Michal and Shah found that the better people’s math skills were, the more responsive they were to information about the effect size. People with little in the way of mathematical chops were no less likely to endorse an intervention with a small effect than one where the effect was large. According to Michal and Shah,

> These results suggest that intervention may be necessary to help individuals interpret the practical importance of findings with small effects. One promising approach may be to provide additional context, such as visualizations, analogies, concrete reexpressions, and benchmarks for comparison, which can improve the interpretability of unfamiliar measurements, very large numbers, and standardized effect size.

Anyway, it’s a great paper. If you’d like to read it, you can request a copy from the authors here.

Follow Steve on Twitter/X.

Thanks for reading - and if you enjoyed the post, please feel free to spread the word and help support my efforts to promote politics-free psychology!

This is a corollary of a phenomenon documented by Daniel Kahneman, which he calls What You See Is All There Is (WYSIATI). WYSIATI is a cognitive bias whereby, when you are presented with evidence (especially evidence that confirms your mental model), you do not ask what evidence might be missing, and you fill in the missing data with whatever comes most easily to mind.

It would take more effort for readers of these articles to reason that the effect might be either large or small, and therefore to avoid jumping to conclusions. The easier conclusion is that the article would not have been written if the effect were small, so readers act as if the effect is large unless told otherwise.